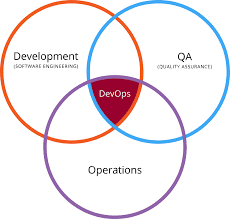

With more applications moving to the cloud, it has become very important for engineers to have safe, reliable cloud infrastructure. That is why the concept of DevOps came into being. To move away from the hassle of hand-built DevOps solutions, one of today’s most popular emerging approaches is to pair containers with cluster management software. Widely used options include Apache Mesos, Docker Swarm and, most popular of all, Kubernetes.

Kubernetes is one of the most popular open source container management systems for technologies like Docker and rkt. It offers features that let you create portable, scalable application deployments that can be managed, scheduled and maintained easily.

Why use Kubernetes

- The increasing popularity of Kubernetes has led to it being available on Azure, GCP and AWS as a managed service

- It can speed up development by making automated deployments and rolling updates easy, giving services almost zero downtime

- Self-healing (automatic restarts, re-scheduling and replicating containers) ensures deployment states are always met

- Since Kubernetes runs standard container images such as those built with Docker, you can run any kind of application on it with basic Docker knowledge

- It is open source and maintained by a large community of contributors

Getting familiar with the Kubernetes terminology

Let's get familiar with the various components used by Kubernetes

- Pods - A pod is a group of containers that share storage and network. The containers inside a pod are managed and deployed as a single unit

- Nodes - Worker machines in a Kubernetes cluster are called nodes. A node contains all the required services to host a pod

- Master node - This is the main machine that controls the nodes and acts as the main entrypoint for all admin tasks

- Cluster - A cluster is made up of a master node and the worker nodes it controls. The master components are installed on the master node; these services control workloads, scheduling and communication. They can all run on a single machine or be spread across several machines for redundancy

- Deployments - Deployments set a state for your pods. They describe how Kubernetes handles the actual deployment. Deployments can be scaled, paused, updated and rolled back.

- Service - Services are responsible for making pods discoverable inside a network or exposing them outside it

- Kubectl - A CLI tool for Kubernetes that we will be using throughout this post

At the end of this post, you should be able to create a cluster and deploy a simple application to it.

The Kubernetes Getting Started guide walks you through setting up a cluster on multiple platforms, such as Google Compute Engine or the Amazon and Azure alternatives. This is a great way to get started instantly. In this post, we will set up Kubernetes locally in a VM using Minikube.

Setting up kubectl

kubectl can be installed in different ways depending on your OS and the installation method you prefer. The options are listed in the official kubectl installation documentation.

To download the latest stable version of kubectl, use the curl command below. This example fetches the macOS (darwin) build; replace darwin with linux in the URL if you are on Linux.

curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/darwin/amd64/kubectl

Once the download completes, make the kubectl binary executable and then move it into your PATH.

chmod +x kubectl

sudo mv kubectl /usr/local/bin
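The download URL above hard-codes the operating system and CPU architecture. As a rough sketch, the same URL can be assembled from the output of uname. The helper names kubectl_url, detect_os and detect_arch are hypothetical and written for this post, and the sketch assumes the release bucket keeps the path layout shown in the curl command.

```shell
#!/bin/sh
# Hypothetical helper: build the kubectl download URL used in this post
# for a given release, OS and CPU architecture.
kubectl_url() {
  version="$1"; os="$2"; arch="$3"
  printf 'https://storage.googleapis.com/kubernetes-release/release/%s/bin/%s/%s/kubectl\n' \
    "$version" "$os" "$arch"
}

# Lower-case the kernel name, e.g. "Linux" -> "linux", "Darwin" -> "darwin".
detect_os() {
  uname -s | tr '[:upper:]' '[:lower:]'
}

# Map the machine name to the name used in the release path.
# Anything other than x86_64 is passed through unchanged and may not
# have a published binary (assumption: check before relying on it).
detect_arch() {
  case "$(uname -m)" in
    x86_64) echo amd64 ;;
    *) uname -m ;;
  esac
}
```

To use it, look up the stable version first and pass the pieces in, for example: curl -LO "$(kubectl_url "$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)" "$(detect_os)" "$(detect_arch)")"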

Once the binary is on your PATH, you can run kubectl commands. For kubectl to communicate with a cluster, it needs a kubeconfig file, which is created automatically when you set up a cluster. By default, this file is located at ~/.kube/config

The kube config file

The config file defines the Kubernetes cluster’s configuration. When the cluster installation script is executed, it creates the .kube directory in the user’s home folder which has a file called config. This file is what holds all the information about the cluster.

The config file holds two main pieces of information

- Location of the cluster

- Auth data for the cluster (authentication mode, username/password, SSL info etc.)
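To make those two pieces of information concrete, here is a minimal sketch of what a kubeconfig file can look like. It writes an illustrative file to a temporary location rather than touching ~/.kube/config. Every name, address and path in it is a placeholder chosen for this example, not a value that minikube generates.

```shell
#!/bin/sh
# Write an illustrative kubeconfig to a temporary file.
# All names, the server address and the certificate paths are placeholders.
sample_kubeconfig="$(mktemp)"
cat > "$sample_kubeconfig" <<'EOF'
apiVersion: v1
kind: Config
clusters:
- name: example-cluster
  cluster:
    server: https://192.0.2.10:8443
    certificate-authority: /path/to/ca.crt
users:
- name: example-user
  user:
    client-certificate: /path/to/client.crt
    client-key: /path/to/client.key
contexts:
- name: example-context
  context:
    cluster: example-cluster
    user: example-user
current-context: example-context
EOF

echo "sample kubeconfig written to $sample_kubeconfig"
```

The clusters entry holds the location of the cluster, the users entry holds the auth data, and a context ties one cluster to one user. To inspect your real configuration, kubectl config view prints the active file and kubectl config current-context shows which context is in use.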

Installing minikube

To install minikube, run the following commands. This example downloads the Linux build of release v0.8.0.

curl -Lo minikube https://storage.googleapis.com/minikube/releases/v0.8.0/minikube-linux-amd64

chmod +x minikube

sudo mv minikube /usr/local/bin/

This downloads minikube, makes the binary executable and moves it into your PATH.

Starting & administering a cluster

Now that you have minikube installed, you can start the cluster using the following command

minikube start

This will start a single-node cluster. You can now use kubectl along with minikube commands to control and administer the cluster.

If you run the command kubectl get nodes, it will list the nodes (just one in this case), their status and time they have been active.

You can launch the Kubernetes dashboard using minikube dashboard - this will open the dashboard in a browser.
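The checks above can be bundled into a small script. This is only a sketch: require_tools and check_cluster are hypothetical function names made up for this post, while minikube status, kubectl cluster-info and kubectl get nodes are the actual commands being wrapped.

```shell
#!/bin/sh
# Hypothetical helper: fail early if any of the named tools is not on PATH.
require_tools() {
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing required tool: $tool" >&2
      return 1
    fi
  done
}

# Hypothetical helper: run the basic health checks for the local cluster.
check_cluster() {
  require_tools minikube kubectl || return 1
  minikube status || return 1       # state of the local VM and cluster
  kubectl cluster-info || return 1  # address of the API server
  kubectl get nodes                 # should list a single node
}
```

After minikube start, calling check_cluster should print the cluster status and the node list, and it returns a non-zero status if a tool is missing or a check fails.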

Deploying your first app

So, you now have minikube and kubectl up and running and have started your first cluster. The next step is to deploy an application on Kubernetes.

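To run your app on Kubernetes, we will use the kubectl run command. The command requires you to provide the deployment name and the app image location. For images hosted outside Docker Hub, you need to use the full repository URL. Note that this describes older kubectl releases: from kubectl 1.18 onward, kubectl run creates a single pod, and kubectl create deployment is the command that creates a deployment.

If you wish to run the app on a specific port, add the --port parameter. The command then becomes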

kubectl run my-sample --image=sample_icon --port=2345

This command performed a few steps for you under the hood

- Searched for a suitable node where an instance of the app could be run (not very difficult since we only have one node)

- Scheduled the app to run on the selected node

- Configured the cluster to reschedule the instance of the app on a new node if and when needed

To confirm that the deployment was successful, you can use the kubectl get deployments command
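The same deployment can also be described declaratively. The sketch below writes a manifest that roughly corresponds to the kubectl run command above, reusing the my-sample name, the sample_icon image and port 2345 from this post. It assumes a cluster recent enough to serve the apps/v1 API, which is newer than the minikube release installed earlier, and the app: my-sample label and the apply_sample function are choices made for this example.

```shell
#!/bin/sh
# Write a Deployment manifest to a temporary file.
# Assumptions: apps/v1 is available; the "app: my-sample" label is illustrative.
manifest="$(mktemp)"
cat > "$manifest" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-sample
spec:
  replicas: 1
  selector:
    matchLabels:
      app: my-sample
  template:
    metadata:
      labels:
        app: my-sample
    spec:
      containers:
      - name: my-sample
        image: sample_icon
        ports:
        - containerPort: 2345
EOF

# Hypothetical helper: submit the manifest to the current cluster.
apply_sample() {
  kubectl apply -f "$manifest"
}
```

Calling apply_sample creates or updates the deployment, after which kubectl get deployments should list my-sample. Keeping the manifest in a file makes the desired state easy to review and version.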

Creating a service for the app

Pods in Kubernetes run on a private, isolated network. They are visible to other pods and services in the same cluster, but not from outside it, because the port defined for the app is not exposed outside the cluster. This is where services come in.

To make our application available outside the cluster, the deployment needs to be exposed as a service. The kubectl expose command does this for you:

kubectl expose deployment my-sample --type="NodePort"

The NodePort service type opens the same port on every node and forwards traffic arriving on that port to the service.

You can now use the kubectl describe service command to see the port on which the service is exposed. Once you know the NodePort, you can use curl to connect to the node.

curl -vk http://<your url here>:<NodePort exposed>
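Instead of reading the port from the describe output by hand, the address can be assembled in a script. This is a sketch under the assumption that the cluster runs in minikube and that the service has a single port. The function names service_url and lookup_service_url are hypothetical; minikube ip and the kubectl jsonpath output option are the real commands behind them.

```shell
#!/bin/sh
# Hypothetical helper: format a node address and port as a URL.
service_url() {
  printf 'http://%s:%s\n' "$1" "$2"
}

# Hypothetical helper: look up the minikube node IP and the NodePort
# of the named service, then print the resulting URL.
lookup_service_url() {
  name="$1"
  ip="$(minikube ip)" || return 1
  port="$(kubectl get service "$name" -o jsonpath='{.spec.ports[0].nodePort}')" || return 1
  service_url "$ip" "$port"
}
```

With the service from this post, curl -v "$(lookup_service_url my-sample)" would then reach the app. Recent minikube releases can also print this address directly with minikube service my-sample --url.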

In this post, we learned how to set up a Kubernetes cluster on a VM, deploy an application to it and make that application available to external systems. But this is just the tip of the iceberg. If you would like to know more about specific topics, do let us know in the comments or get in touch.